Adaptive Multi-View Path Tracing

Basile Fraboni Jean-Claude Iehl Vincent Nivoliers Guillaume Bouchard

Abstract

Rendering photo-realistic image sequences using path tracing and Monte Carlo integration often requires sampling a large number of paths to get converged results. In the context of rendering multiple views or animated sequences, such sampling can be highly redundant. Several methods have been developed to share sampled paths between spatially or temporally similar views. However, such sharing is challenging since it can lead to bias in the final images. Our contribution is a Monte Carlo sampling technique which generates paths while taking several cameras into account. First, we sample the scene from all the cameras to generate hit points. Then, an importance sampling technique generates bouncing directions which are shared by a subset of cameras. This set of hit points and bouncing directions is then used within a regular path tracing solution. For animated scenes, paths remain valid for a fixed time only, but sharing can still occur between cameras as long as their exposure time intervals overlap. We show that our technique generates less noise than regular path tracing and does not introduce noticeable bias.
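To illustrate why sharing samples between views need not introduce bias, here is a minimal one-dimensional sketch. It is not the sampler described in the paper: each "view" is reduced to a hypothetical integrand with its own Gaussian sampling density, and samples are shared using the standard balance-heuristic combination from multiple importance sampling. The names `shared_estimates`, `gaussian_pdf`, and all numeric values are assumptions made for this example.

```python
import math
import random


def gaussian_pdf(x, mu, sigma):
    """Density of a normal distribution N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def shared_estimates(integrands, centers, sigma, n_per_view, rng):
    """Estimate one integral per view while reusing every view's samples.

    View k draws n_per_view samples from a Gaussian centered at centers[k].
    Each sample x contributes to every view j with the value
    f_j(x) / sum_l n_l * p_l(x), i.e. the balance heuristic. Dividing by
    the combined density of all sampling strategies is what keeps each
    estimate unbiased.
    """
    totals = [0.0] * len(integrands)
    for mu in centers:
        for _ in range(n_per_view):
            x = rng.gauss(mu, sigma)
            combined = sum(n_per_view * gaussian_pdf(x, c, sigma) for c in centers)
            for j, f in enumerate(integrands):
                totals[j] += f(x) / combined
    return totals


if __name__ == "__main__":
    rng = random.Random(0)
    centers = [0.0, 0.3, 0.6]  # three nearby toy "views"
    # Each toy integrand is a bump whose exact integral is sqrt(pi).
    integrands = [lambda x, c=c: math.exp(-(x - c) ** 2) for c in centers]
    estimates = shared_estimates(integrands, centers, 1.0, 2000, rng)
    print("exact value:", math.sqrt(math.pi))
    print("shared estimates:", estimates)
```

Every view ends up using three times as many samples as it drew itself. Dividing by a single view's density instead of the combined one would give a biased result, which is the pitfall mentioned above.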

Cite

@inproceedings{fraboni:hal-02279950,
  TITLE = {{Adaptive multi-view path tracing}},
  AUTHOR = {Fraboni, Basile and Iehl, Jean-Claude and Nivoliers, Vincent and Bouchard, Guillaume},
  URL = {https://hal.archives-ouvertes.fr/hal-02279950},
  BOOKTITLE = {{EGSR 2019 Eurographics Symposium on Rendering}},
  ADDRESS = {Strasbourg, France},
  ORGANIZATION = {{Tamy Boubekeur and Pradeep Sen}},
  YEAR = {2019},
  KEYWORDS = {Computer graphics ; Ray tracing ; Visibility},
  PDF = {https://hal.archives-ouvertes.fr/hal-02279950/file/paper.pdf},
  HAL_ID = {hal-02279950},
  HAL_VERSION = {v1},
}

Example sequence #1

The following sequence is made of 100 frames. It is a shot extracted from the Blender open movie Agent 327, made freely available by the Blender Institute. The standard method renders each frame independently. Our method jointly renders all the frames and shares paths between frames with overlapping exposure intervals. For the same computation budget, our algorithm reduces both the variance and the perceived flickering.
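As a small illustration of the overlap criterion, the sketch below lists, for each frame, the other frames whose exposure interval overlaps its own. The exposure model (frame `k` opens at `k * frame_interval` and stays open for `shutter` time units) and the function names are assumptions for this example, not the configuration used for the sequence.

```python
def make_exposures(n_frames, frame_interval, shutter):
    """Assumed model: frame k is exposed over [k * frame_interval, k * frame_interval + shutter]."""
    return [(k * frame_interval, k * frame_interval + shutter) for k in range(n_frames)]


def overlapping_frames(exposures):
    """Map each frame index to the other frames whose exposure interval overlaps it.

    Two intervals overlap when each one opens strictly before the other closes.
    """
    sharing = {i: [] for i in range(len(exposures))}
    for i, (open_i, close_i) in enumerate(exposures):
        for j, (open_j, close_j) in enumerate(exposures):
            if i != j and open_i < close_j and open_j < close_i:
                sharing[i].append(j)
    return sharing


if __name__ == "__main__":
    # Five frames, one time unit apart, each exposed for 2.5 time units.
    exposures = make_exposures(5, 1.0, 2.5)
    for frame, partners in overlapping_frames(exposures).items():
        print(f"frame {frame} can share with frames {partners}")
```

With a shutter shorter than the frame interval, no intervals overlap and every list is empty, so no frame would have a sharing partner under this criterion.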

Total time budget 4000 seconds (40 seconds / frame) for the whole sequence

| frame 10 | frame 12 | frame 14 | frame 16 | frame 18 |

| frame 20 | frame 22 | frame 24 | frame 26 | frame 28 |

Video comparison

Single frame comparison

Example sequence #2

The following sequence shows 100 frames of a custom Cornell Box, with fast-moving cubes and glossy materials. This sequence is complex to render because cameras may see geometry at tangent angles while the cubes are moving, which implies large variations of the Jacobian as well as temporal visibility changes. The flickering reduction is particularly visible on the ceiling.
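To give a sense of why tangent geometry is troublesome, the sketch below evaluates one standard change-of-variables factor, the area-to-solid-angle term |cos θ| / d², for the same surface point seen from two cameras. Treating this term as a stand-in for the Jacobian mentioned above is an assumption of this example, and the function name and camera positions are hypothetical.

```python
import math


def area_to_solid_angle(camera, point, normal):
    """Return |cos(theta)| / d^2 for a surface point seen from a camera.

    `normal` is assumed to be unit length. theta is the angle between the
    normal and the direction from the point to the camera, and d is the
    distance between them.
    """
    to_camera = [c - p for c, p in zip(camera, point)]
    dist2 = sum(v * v for v in to_camera)
    cos_theta = sum(v * n for v, n in zip(to_camera, normal)) / math.sqrt(dist2)
    return abs(cos_theta) / dist2


if __name__ == "__main__":
    point = (0.0, 0.0, 0.0)
    normal = (0.0, 0.0, 1.0)
    head_on = area_to_solid_angle((0.0, 0.0, 2.0), point, normal)
    grazing = area_to_solid_angle((2.0, 0.0, 0.02), point, normal)
    print("head-on factor:", head_on)
    print("grazing factor:", grazing)
    print("ratio:", head_on / grazing)
```

Both cameras are at almost the same distance from the point, yet the factor differs by about two orders of magnitude. A sample that is well distributed for one camera can therefore receive a very different weight when reused for the other.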

Example sequence #3

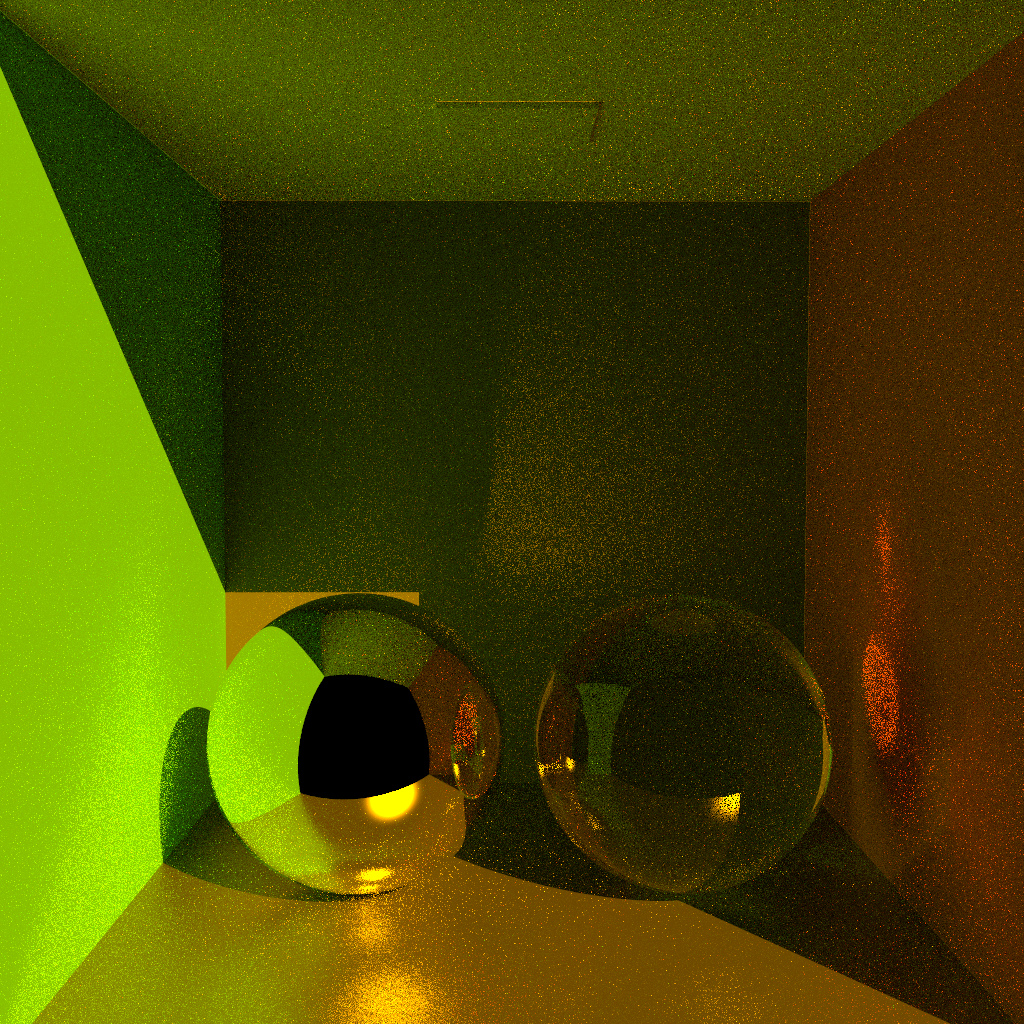

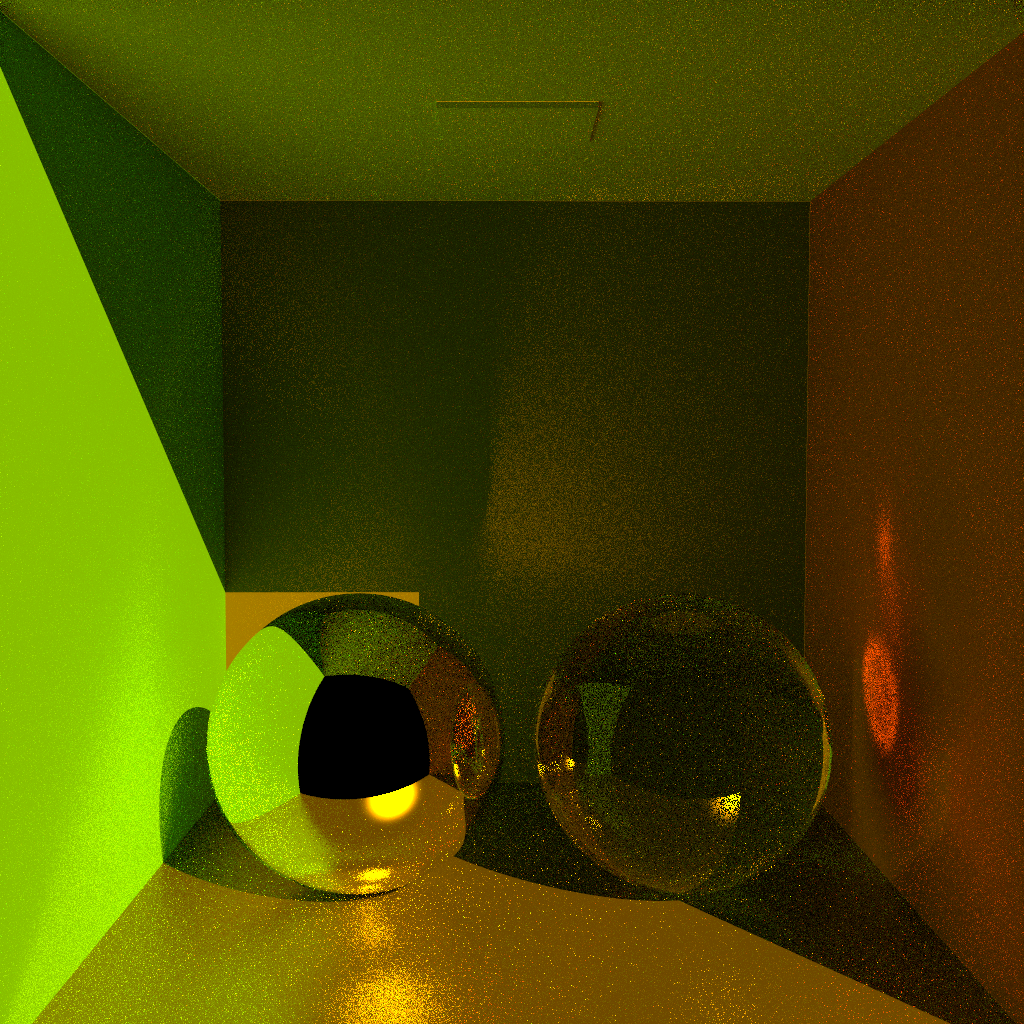

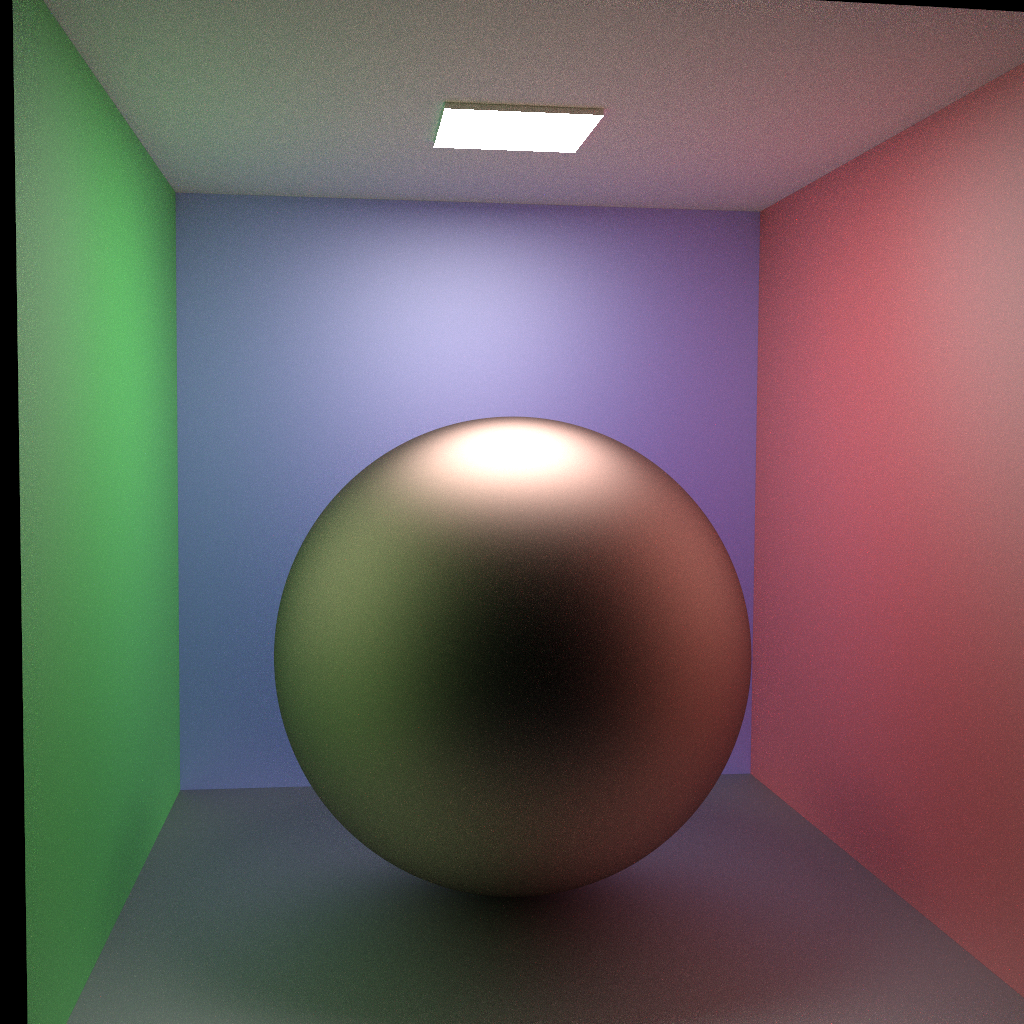

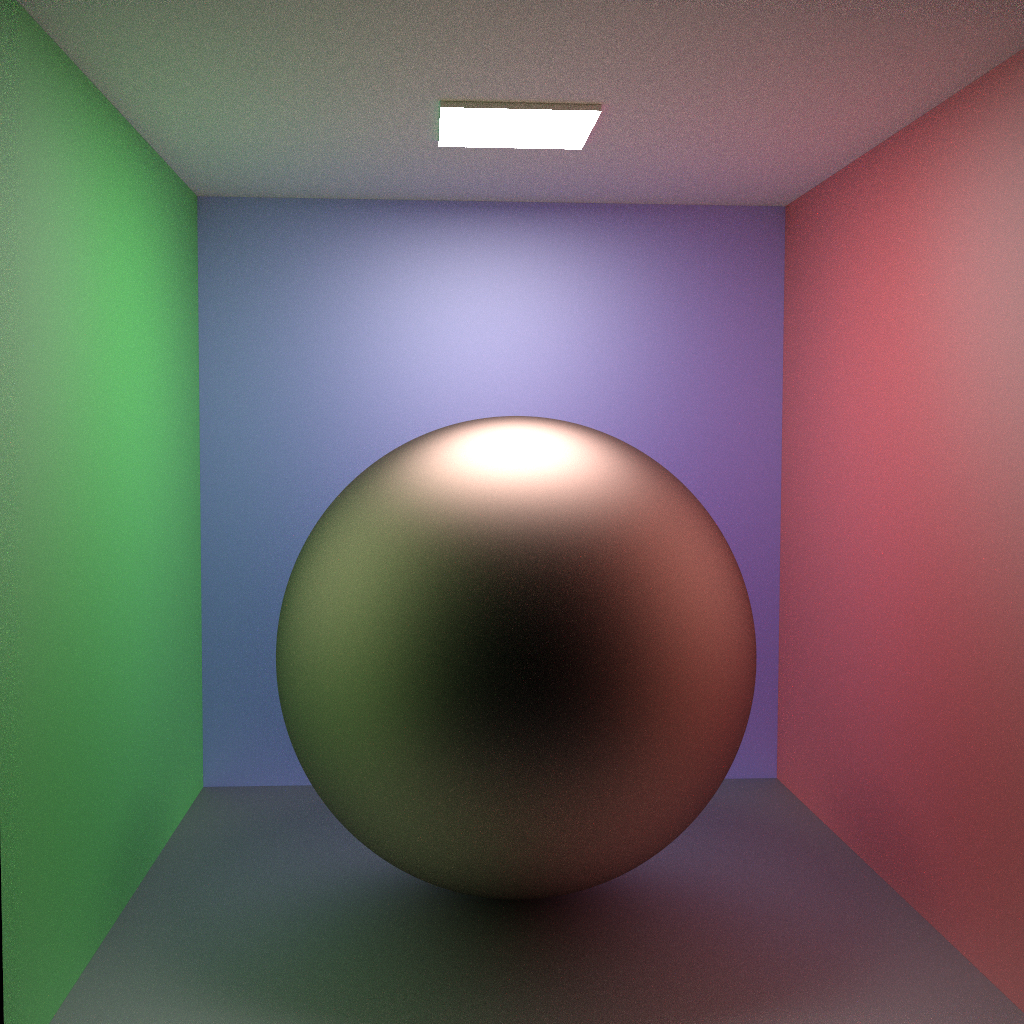

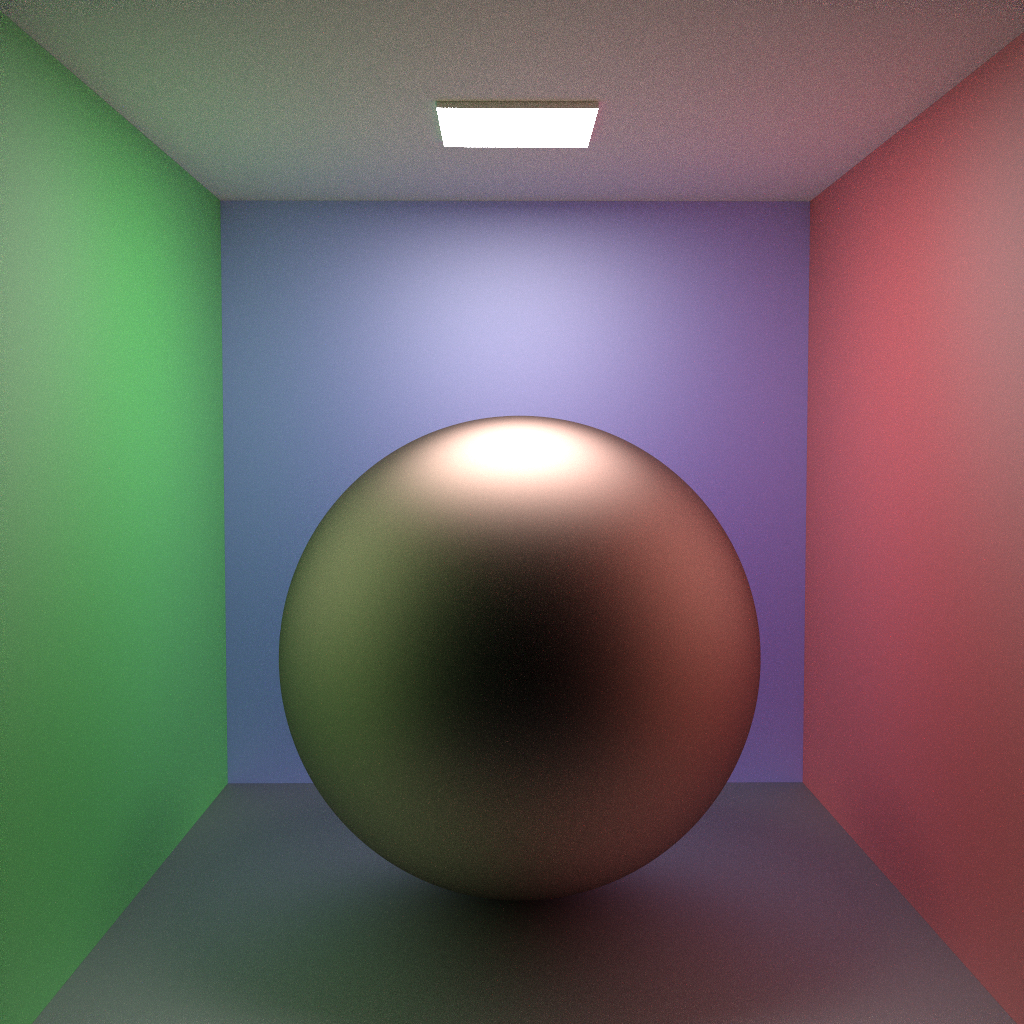

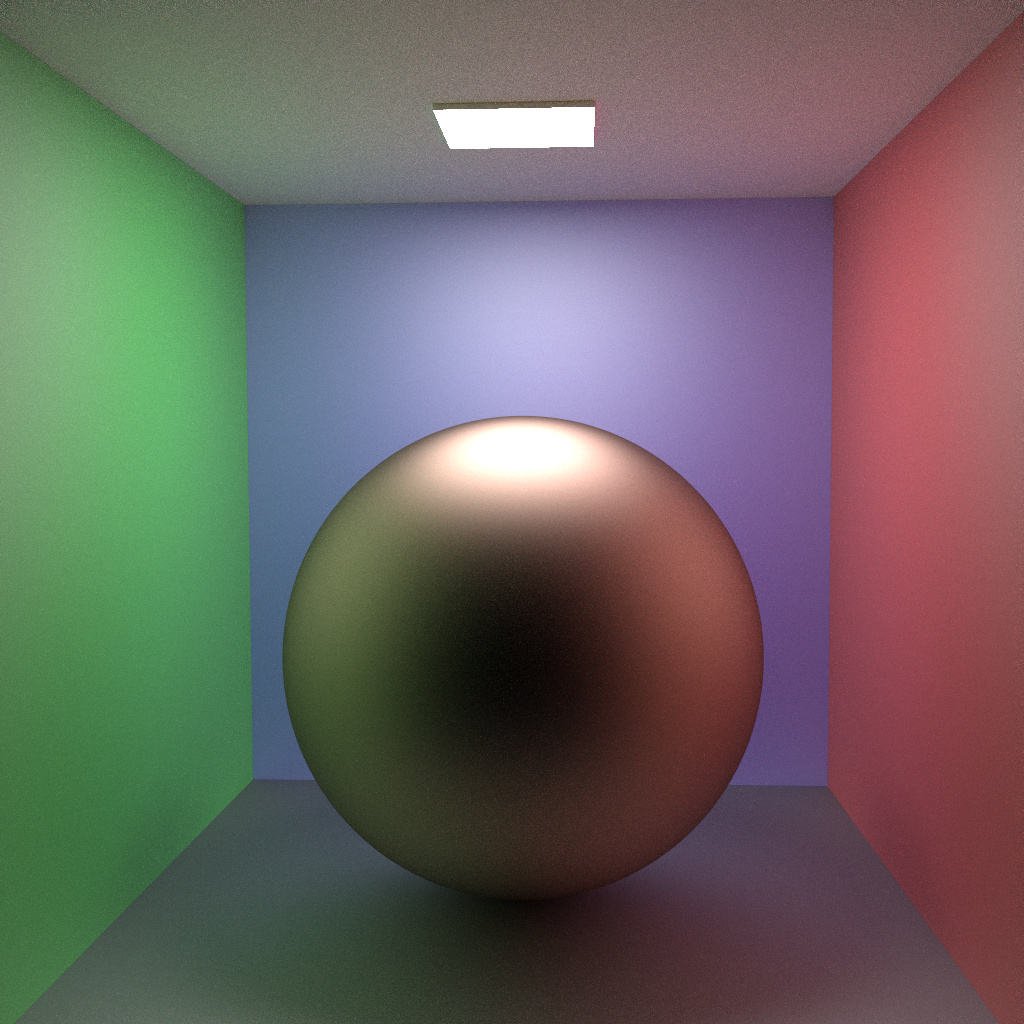

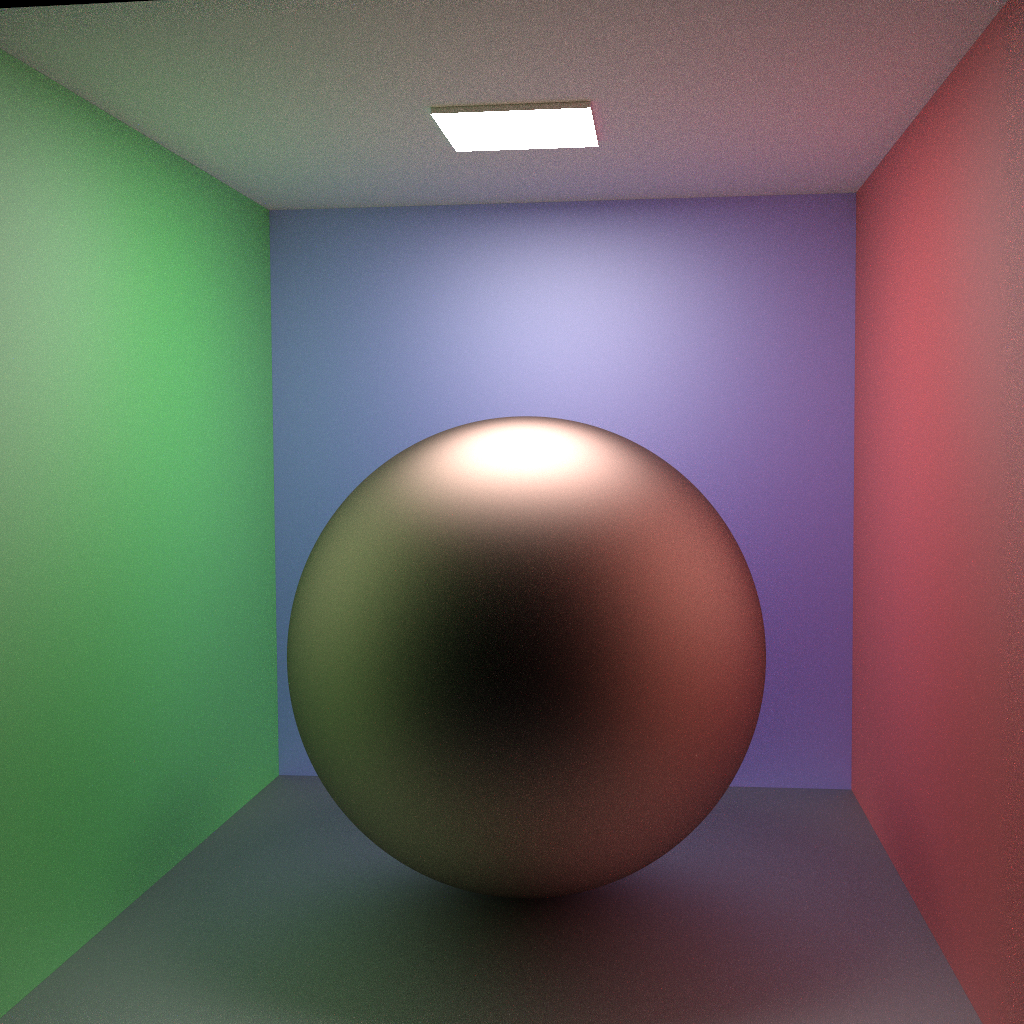

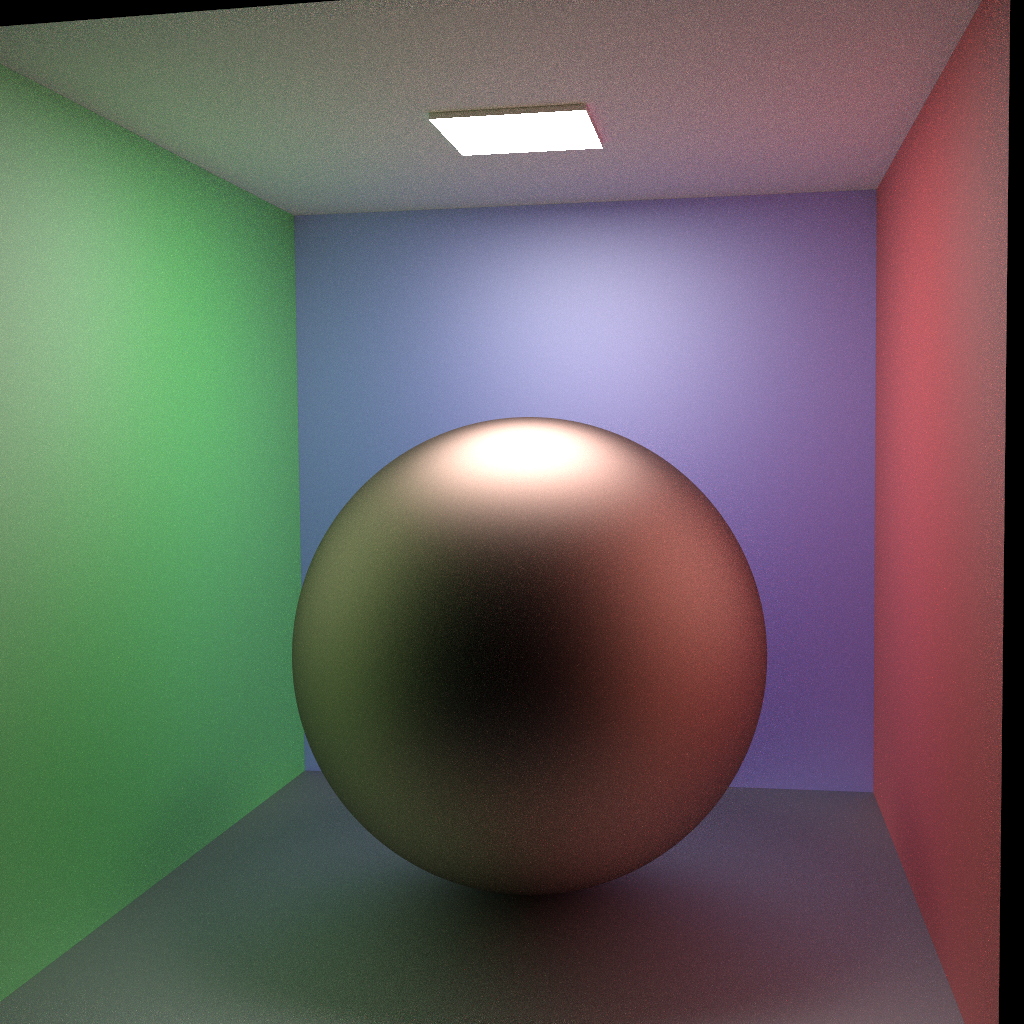

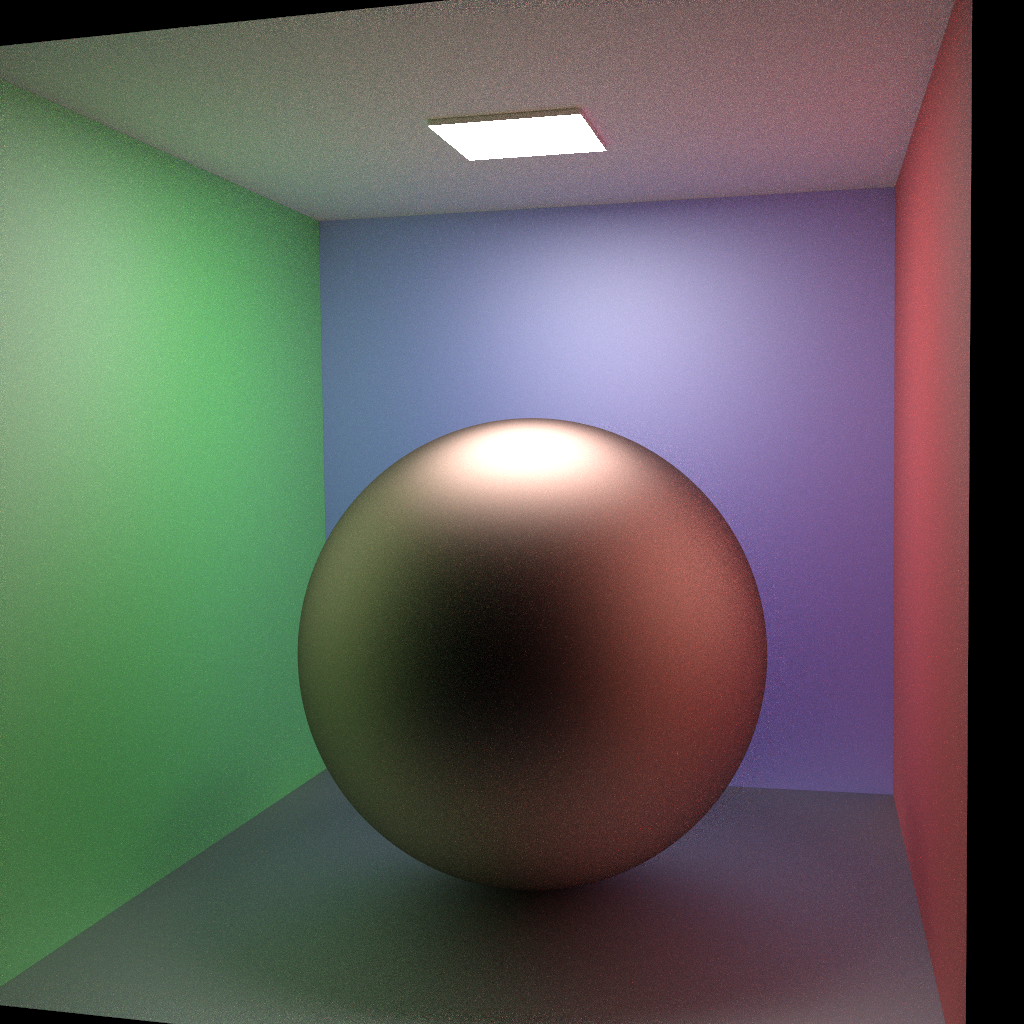

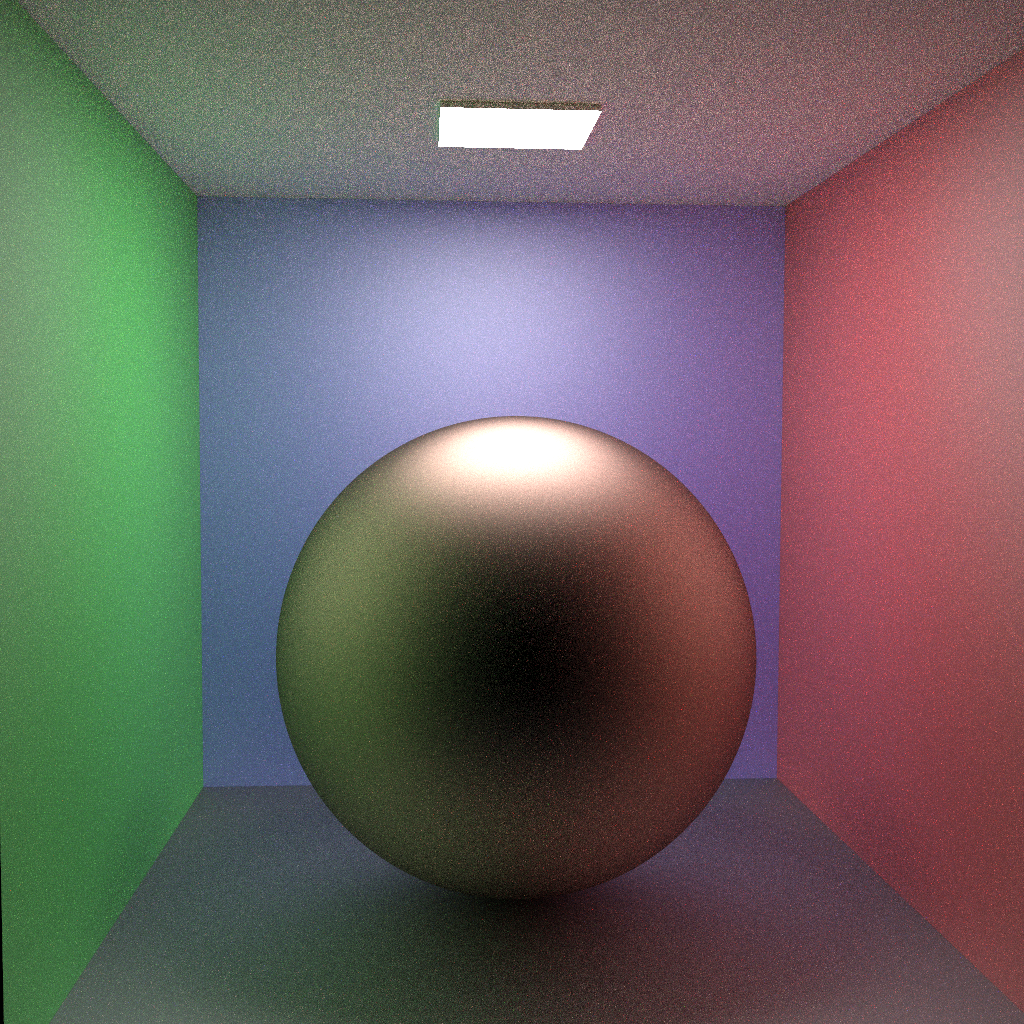

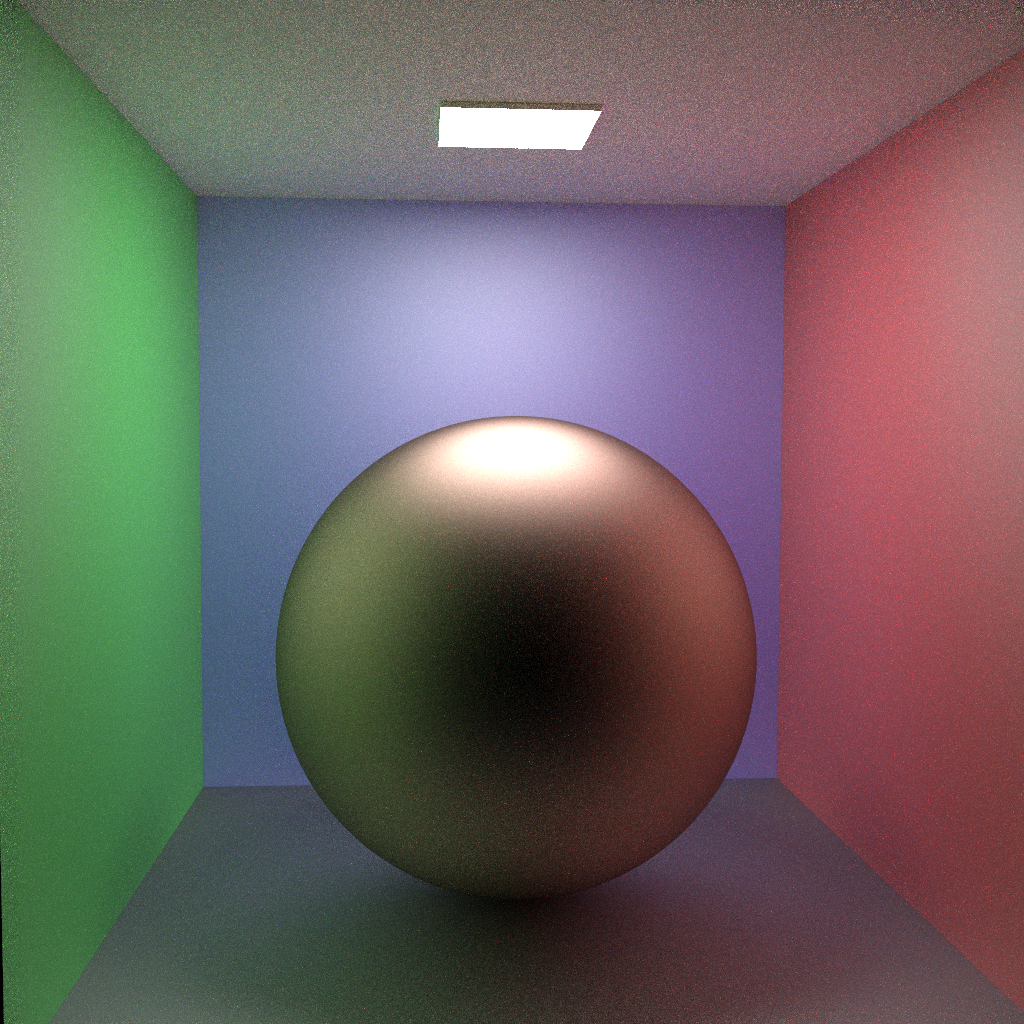

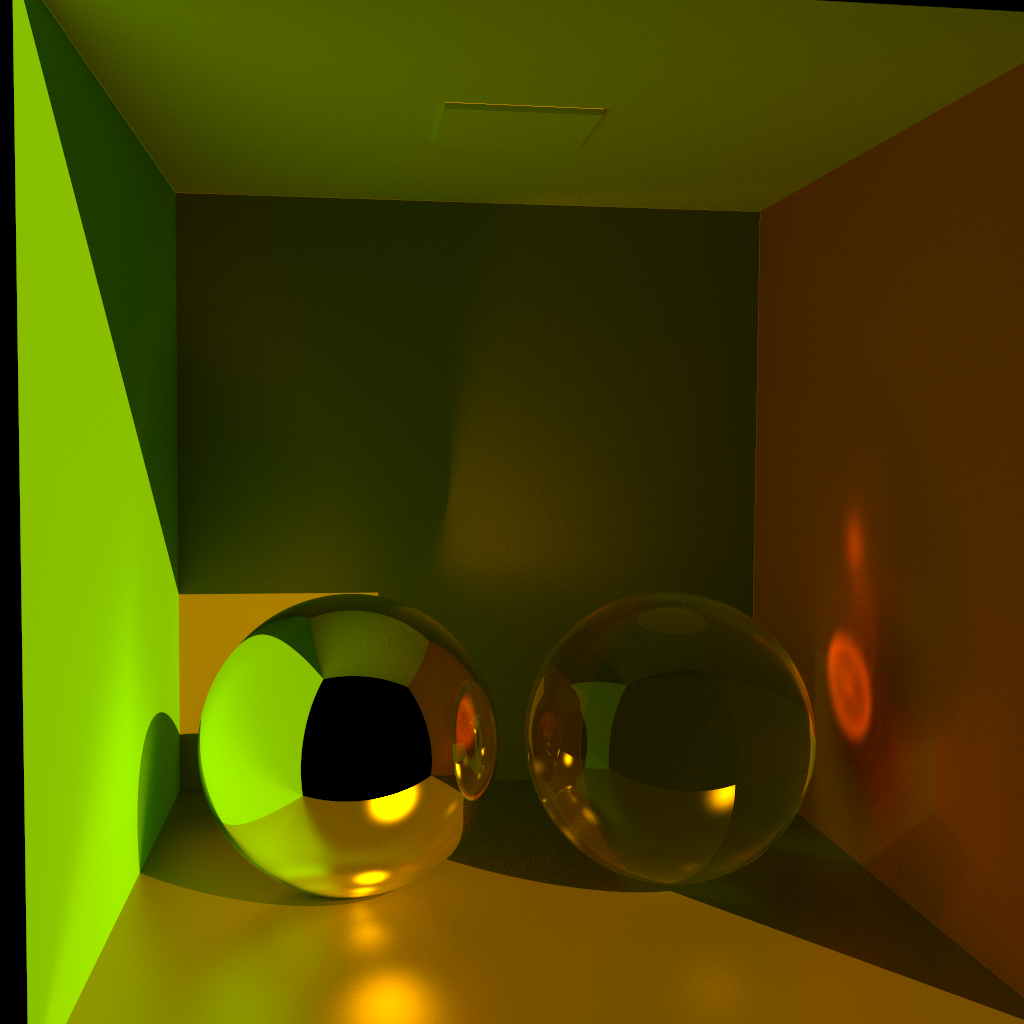

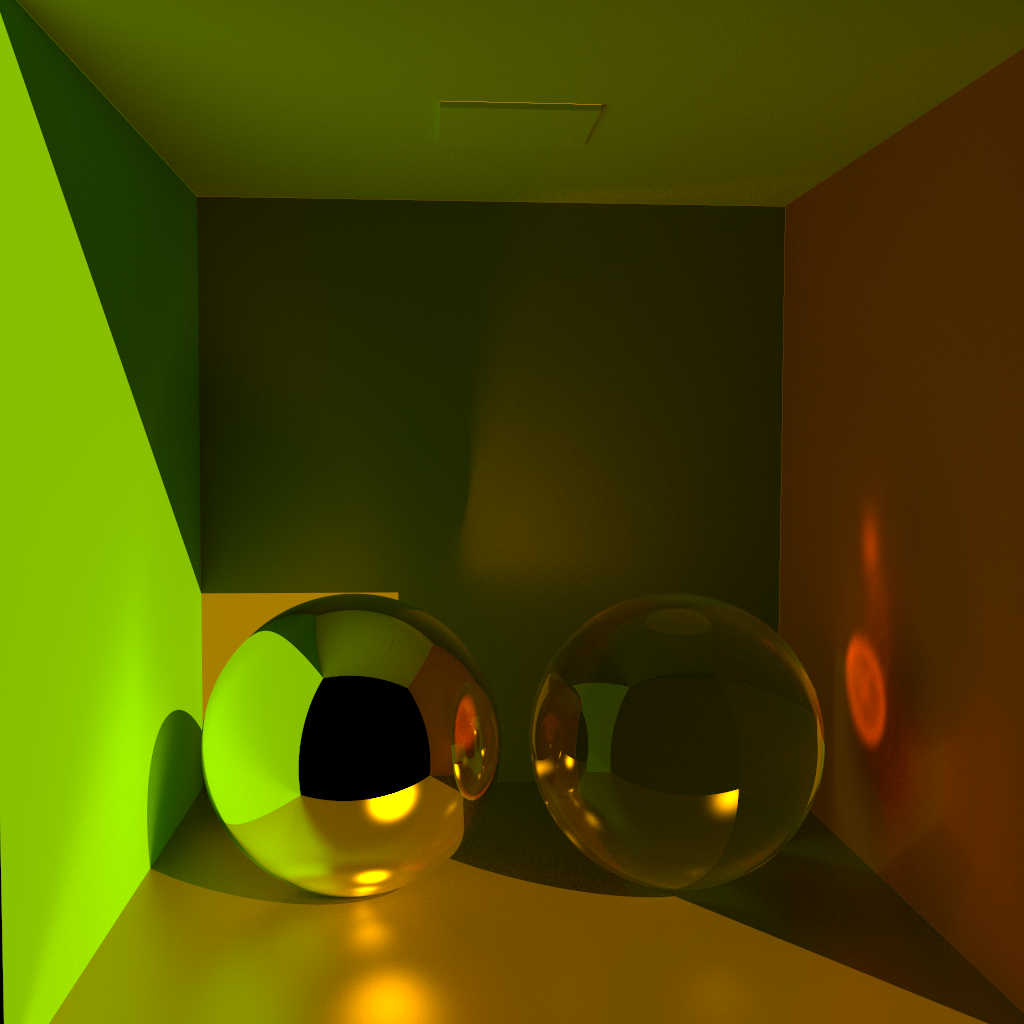

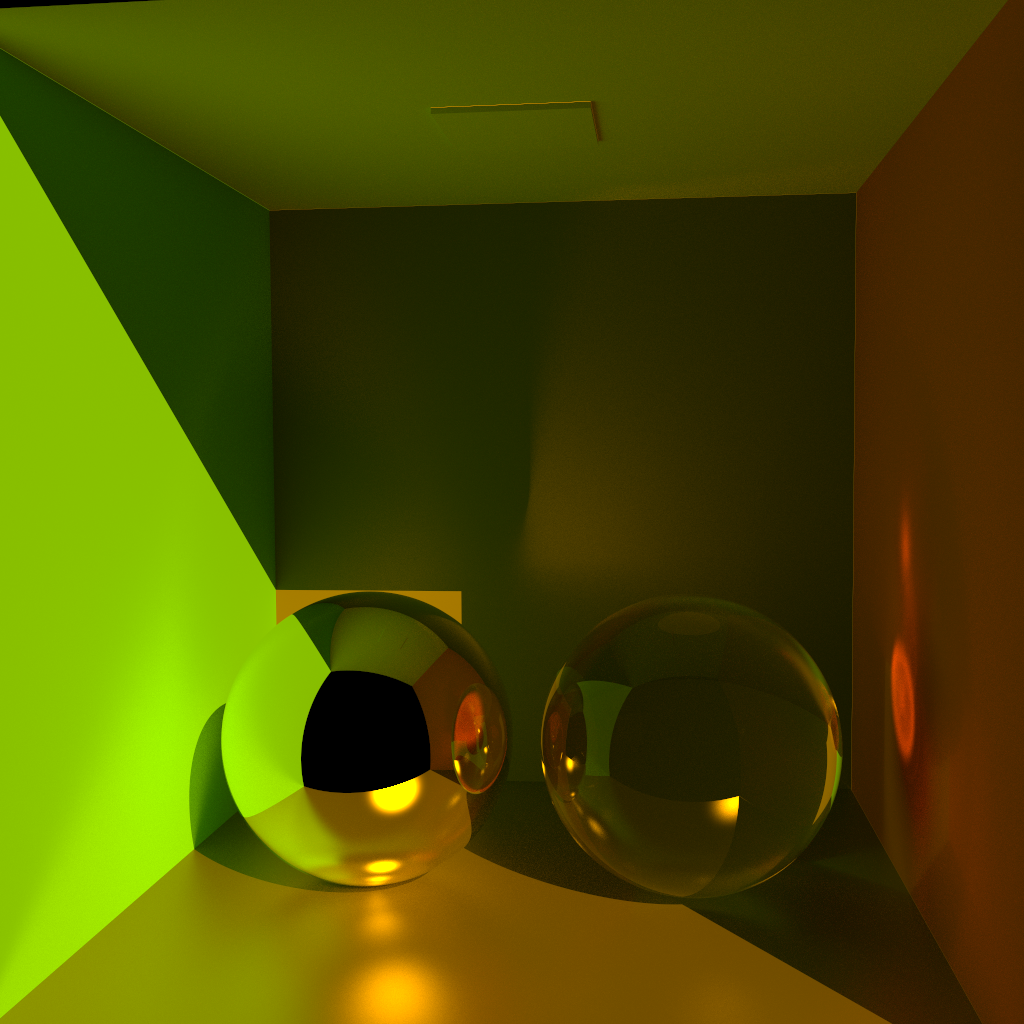

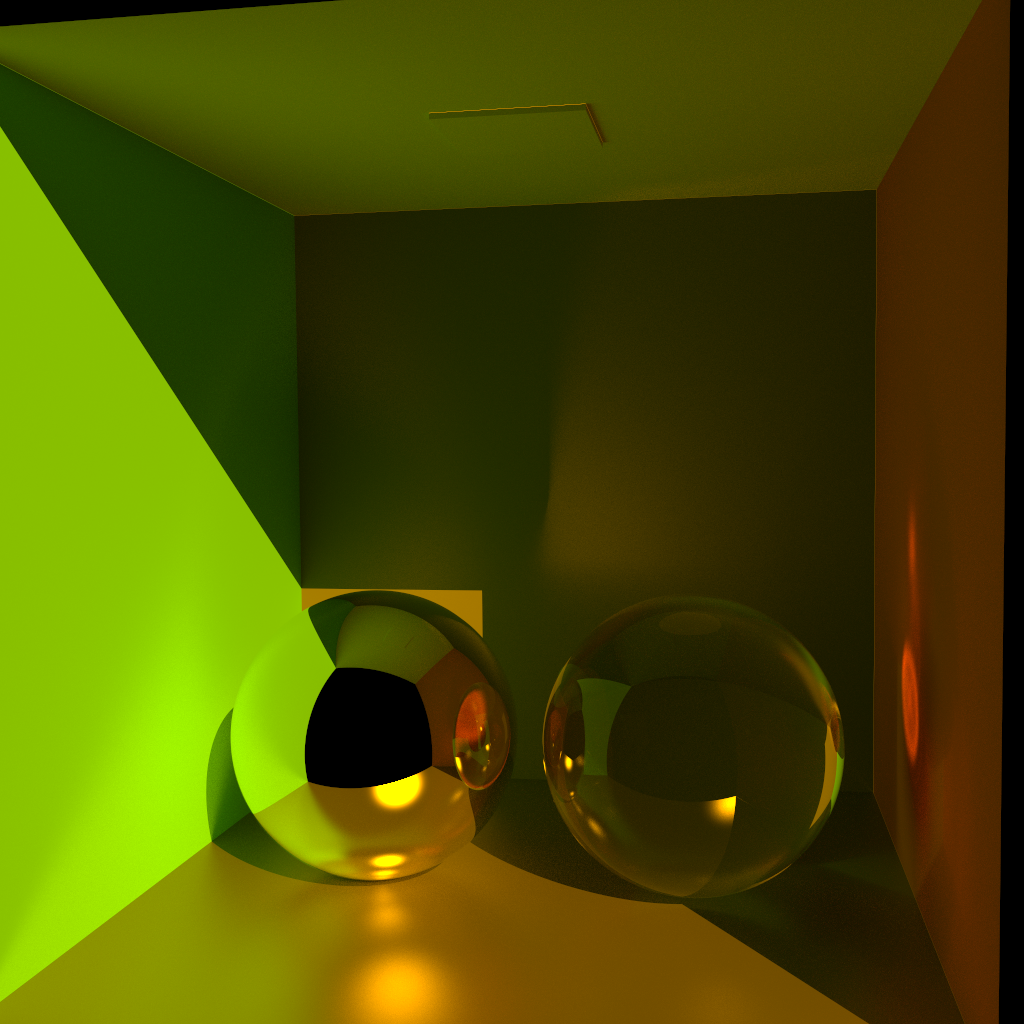

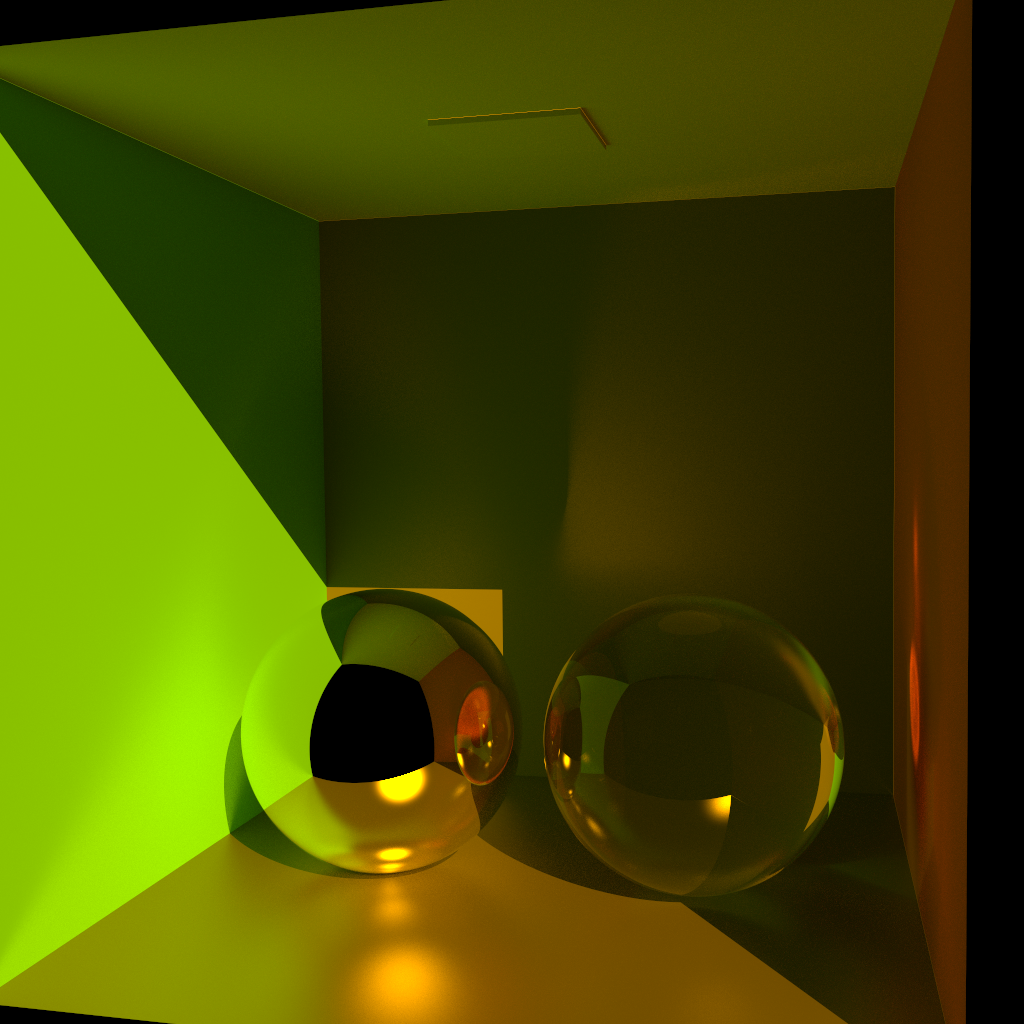

Comparison on a 9-image sequence of a static scene with slightly glossy materials.


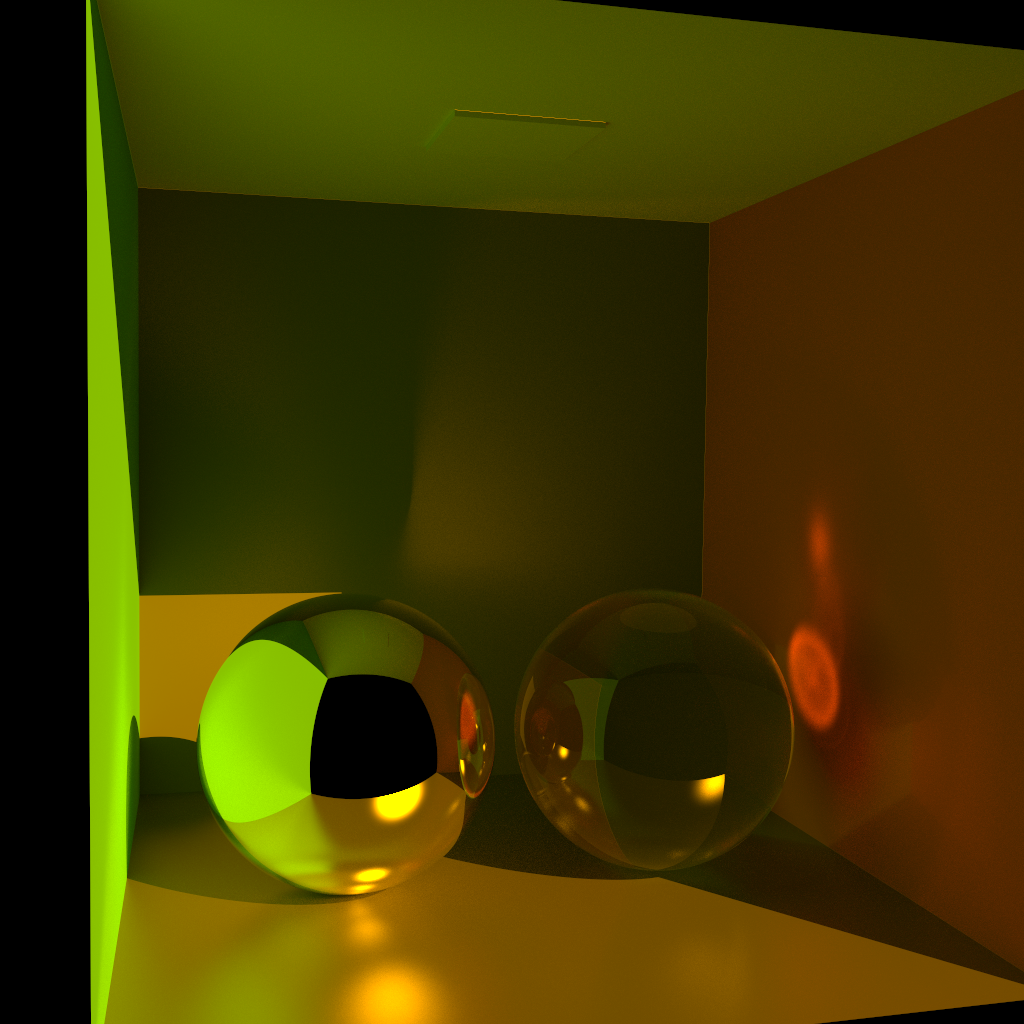

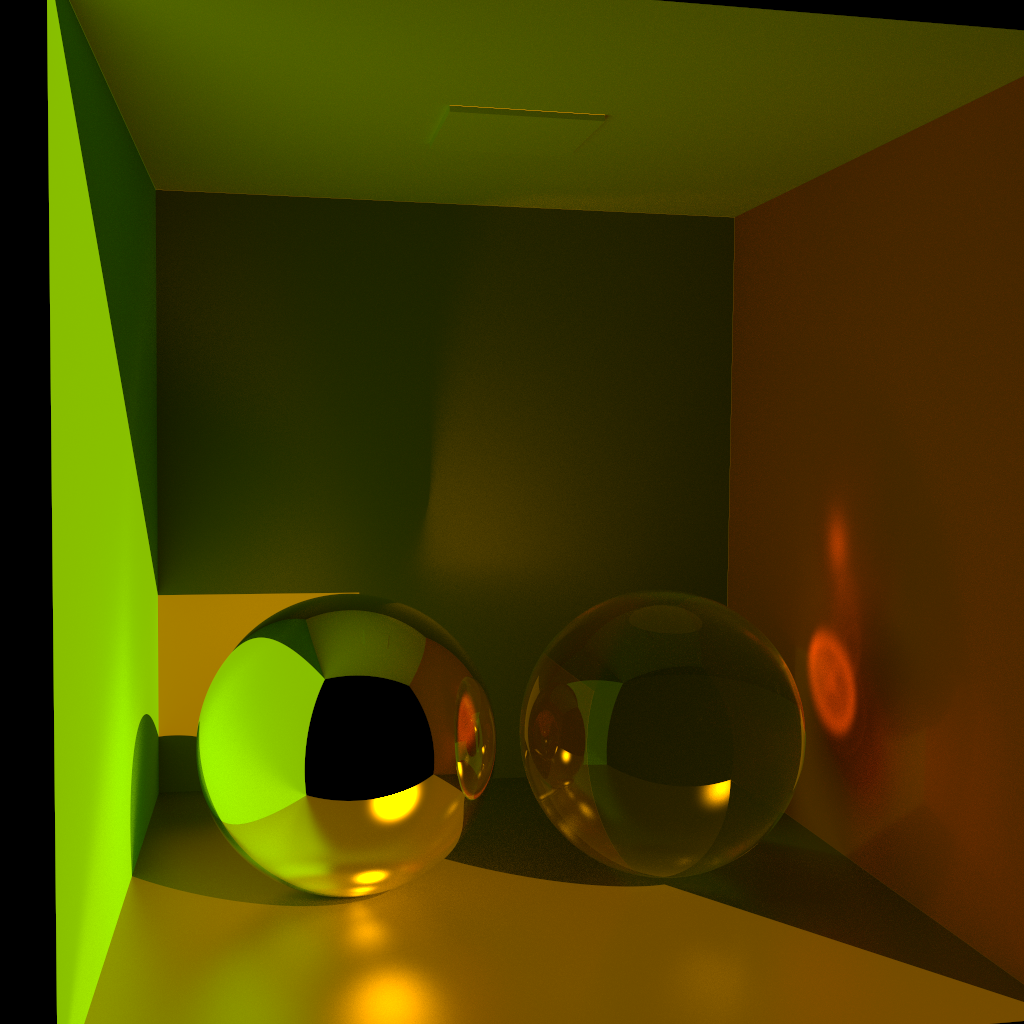

Example sequence #4

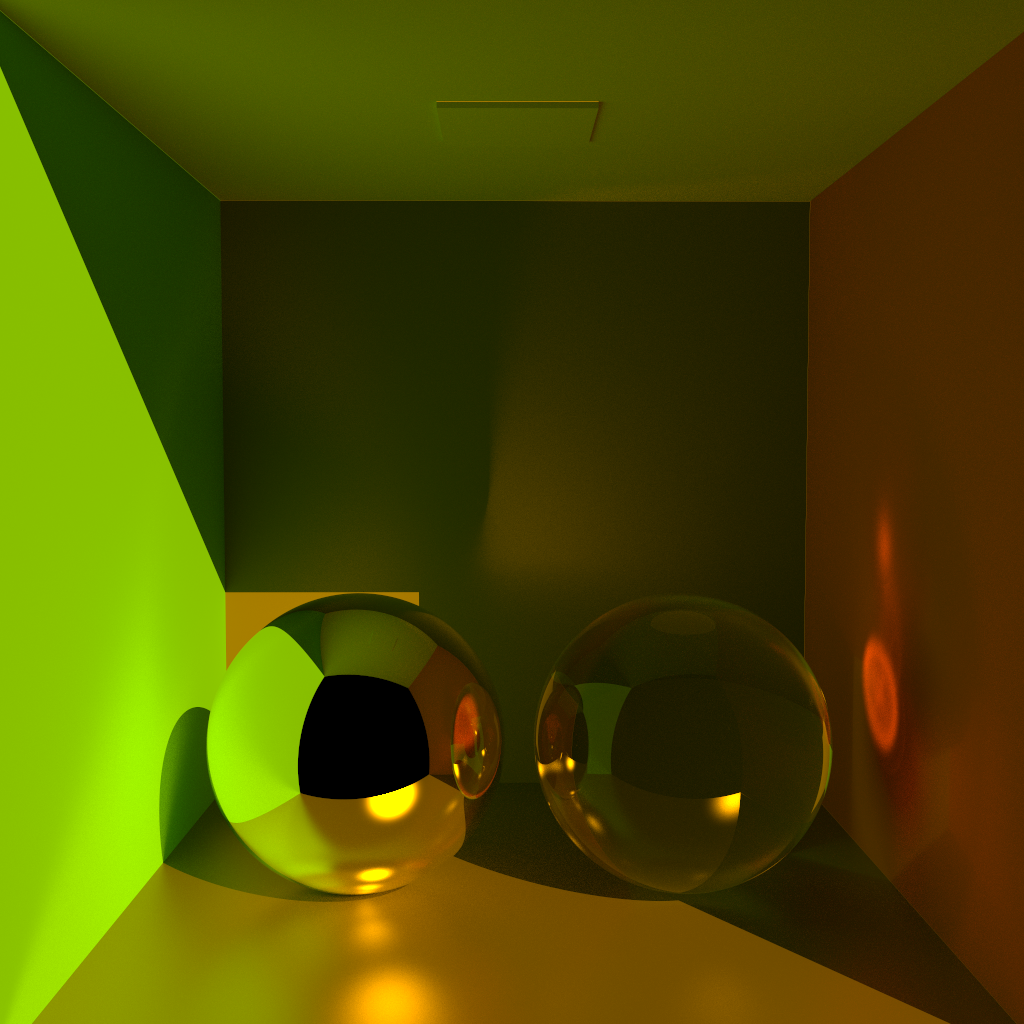

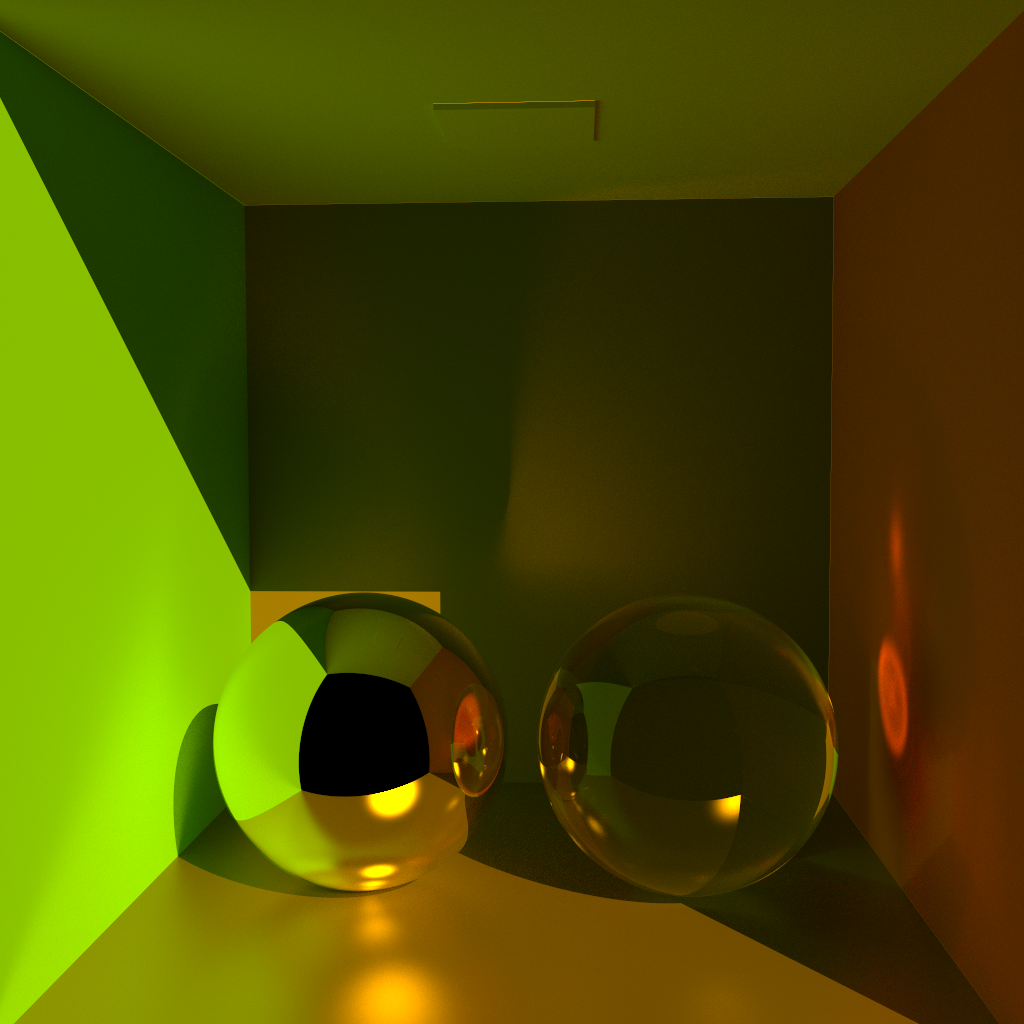

Comparison on a 9-image sequence (scene 0).
